How Generative AI Is Transforming the Way Developers Work

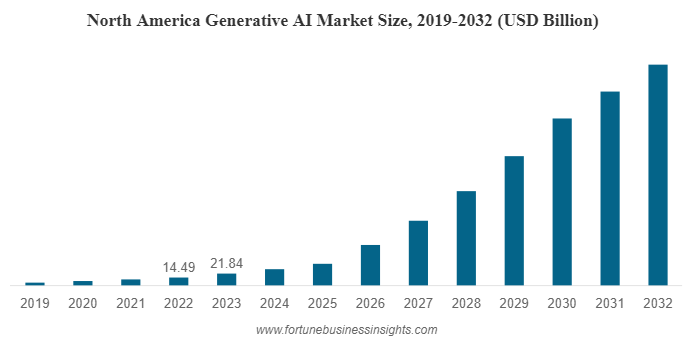

The Generative AI market is set to explode from $67.18 billion in 2023 to an astounding $967.65 billion by 2032, surging at a yearly growth rate of 39.6%.

Generative AI has many enterprise-level use cases that have piqued the interest of software developers and entrepreneurs alike. You have probably already seen how tools such as ChatGPT, DALL-E, and GitHub Copilot have made people sit up and take notice. From content creation to streamlining tasks across coding, project management, and development, businesses are keenly exploring how Generative AI can spur innovation, streamline operations, improve customer experiences, and lead to better products and services.

But how is generative AI changing the way developers work? This blog explores the impact of generative AI on software development and how it influences the future of coding strategy and execution.

What is Generative AI? What is Generative AI used for?

Generative AI is a type of artificial intelligence that creates new content, including text, images, video, music, and even software code. Generative AI models learn patterns from their training data using deep neural networks, and then produce new content based on those patterns.

Generative AI serves various purposes:

- It can handle standard AI tasks such as pattern recognition, but it can also generate completely new works.

- Artists, developers, and businesses use it to create text, graphics, video, datasets, and software code, and to translate between languages.

For instance, developers no longer have to spend hours searching for code snippets online. When coding with AI, they can ask for code examples in plain language, have code completed automatically, or convert code from one language to another. GitHub Copilot is a real-world tool that uses this kind of AI to suggest code snippets directly in the coding environment.

- Through the power of machine learning and neural networks, Generative AI empowers developers to automate and enhance various aspects of their work, from code generation and documentation to design and testing.

- Having learned from vast amounts of data, Generative AI models can generate code snippets, propose solutions to complex problems, and suggest improvements to existing codebases. This can accelerate development and, when the suggestions are reviewed and tested, help reduce errors and improve the overall quality of the code.

- Generative AI allows developers to explore new design possibilities and create unique user experiences. By training AI models on large datasets of images, developers can generate original designs, intelligent interfaces, and realistic prototypes.

Here’s how Generative AI works:

- Training: Generative AI starts with a foundation model, a deep learning model trained on massive amounts of raw, unstructured data. For text generation, large language models (LLMs) are commonly used. During training, the model predicts the next element in a sequence (e.g., the next word in a sentence) and adjusts its parameters to minimize the difference between its predictions and the actual data.

- Tuning: The foundation model is tailored to a specific Generative AI application, for example by fine-tuning it for code generation or image creation.

- Generation, Evaluation, and Retuning: The model generates content based on user prompts. It’s evaluated, and adjustments are made to improve quality and accuracy over time.
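The training step above can be made concrete with a deliberately tiny example. The sketch below is not how a foundation model is built: real LLMs are neural networks trained by gradient descent on enormous datasets. As a stand-in, it counts which token follows which in a three-line corpus (a bigram model), which illustrates the same idea of learning to predict the next element of a sequence and then generating text one token at a time. The function names (`train_bigram`, `predict_next`, `generate`) and the corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each token, which tokens follow it in the training text."""
    counts = defaultdict(Counter)
    for line in corpus:
        tokens = line.split()
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_tokens=5):
    """Generate text one token at a time, feeding each prediction back in."""
    output = [start]
    for _ in range(max_tokens):
        nxt = predict_next(counts, output[-1])
        if nxt is None:
            break
        output.append(nxt)
    return " ".join(output)


# Toy "training data": whitespace-separated code tokens (illustrative only).
corpus = [
    "def add ( a , b ) :",
    "def sub ( a , b ) :",
    "return a + b",
]
model = train_bigram(corpus)
print(generate(model, "(", max_tokens=2))
```

Feeding each predicted token back in as the next input is, in miniature, the same loop that LLM-based tools run when they produce a completion.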

Design and Development of Generative AI Coding Tools

- Foundation Models:

  - Generative AI tools start with a foundation model. The most common kind today is the large language model (LLM), such as GPT-4. These models are trained on massive amounts of raw data, learning patterns from text, code, and other content.

  - LLMs serve as the “brains” of generative AI. They capture context, syntax, and semantics, which makes them well suited to content generation.

- Natural Language Prompts and Context:

  - Users interact with generative AI using natural language prompts. For example, you might describe a coding problem or ask for a specific function.

  - The model uses this prompt along with existing code (if available) to understand the task. It “reads” the context and infers what’s needed.

- Content Generation:

  - Armed with context, the model generates content. It can suggest code snippets, entire functions, or even paragraphs of text.

  - The model produces its output one token at a time: it predicts what comes next from the patterns it has learned, and each new token becomes part of the context for the following prediction.

- GitHub Copilot and Similar Tools:

  - GitHub Copilot, developed by GitHub and OpenAI, is a prime example. It is built on OpenAI’s GPT family of models.

  - Copilot lives inside integrated development environments (IDEs) and assists developers. It suggests code, completes functions, and even writes comments.

  - By understanding natural language descriptions, Copilot bridges the gap between human intent and code execution.
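The flow described in this section (a natural language prompt, combined with existing code, passed to a model that generates output step by step) can be sketched in a few lines. This is a simplified illustration and not how GitHub Copilot or any particular tool is implemented. Every name here (`build_prompt`, `complete`, `make_canned_model`, the `<end>` stop marker) is hypothetical, and the "model" is a stub that replays a fixed answer so the example runs without any external service.

```python
def build_prompt(request, existing_code="", max_context_chars=2000):
    """Combine the user's natural-language request with nearby code."""
    # Keep only the tail of the file: for this sketch we assume the code
    # closest to the cursor is the most relevant context.
    context = existing_code[-max_context_chars:] if max_context_chars > 0 else ""
    parts = []
    if context:
        parts.append("# Existing code:\n" + context)
    parts.append("# Task: " + request)
    return "\n\n".join(parts)


def complete(prompt, next_token, max_tokens=50, stop="<end>"):
    """Ask the model for one token at a time until it signals it is done."""
    generated = []
    for _ in range(max_tokens):
        # The model always sees the prompt plus everything generated so far.
        token = next_token(prompt + "".join(generated))
        if token == stop:
            break
        generated.append(token)
    return "".join(generated)


def make_canned_model(tokens):
    """Hypothetical stand-in for an LLM: replays a fixed list of tokens."""
    remaining = iter(tokens)
    return lambda text: next(remaining, "<end>")


prompt = build_prompt(
    "write a function that squares a number",
    existing_code="import math\n",
)
model = make_canned_model(["def square(x):", "\n    return x * x", "<end>"])
suggestion = complete(prompt, model)
print(suggestion)
```

In a real tool the stub would be replaced by a call to a hosted or local model, and the context selection would be far more elaborate, but the shape of the loop stays the same: assemble context, generate, stop.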

Why Developers Should Care About Large Language Models

- Improved Code Quality:

  - LLMs can suggest code snippets, complete functions, and even write comments.

  - Drawing on what they have learned about programming languages, they can help improve code quality by flagging likely errors, suggesting best practices, and improving readability.

- Faster Development Cycles:

  - LLMs accelerate development by automating repetitive tasks.

  - Instead of manually writing boilerplate code, developers can rely on LLMs to generate it swiftly.

  - This speed boost allows teams to iterate faster and deliver software more efficiently.

- Enhanced Collaboration:

  - LLMs act as collaborative coding companions.

  - They bridge the gap between human intent and code execution, making teamwork smoother.

  - Developers can discuss ideas with their LLM-powered sidekick, refining solutions together.

- Addressing Concerns:

  - Model biases are a valid concern. Developers must be aware of potential biases in LLM-generated content.

  - Regular audits, diverse training data, and fine-tuning can mitigate biases.

  - Additionally, ethical guidelines help ensure the responsible use of LLMs.

Real-World Scenarios Where Generative AI Benefits Developers

Let’s explore some real-world scenarios where generative AI benefits developers. These use cases demonstrate how generative AI can transform development processes and enhance productivity:

- Rapid Prototyping:

  - Generative AI helps developers quickly create prototypes for new features or applications.

  - Generating code snippets, UI layouts, or mock data accelerates the early stages of development.

  - Imagine building a proof-of-concept app with minimal effort, thanks to generative AI.

- Code Refactoring:

  - Refactoring existing code can be time-consuming and error-prone.

  - Generative AI assists by suggesting cleaner, more efficient code alternatives.

  - It can identify redundant code, suggest performance optimizations, and help code adhere to best practices.

- Learning New Languages and Frameworks:

  - When developers need to learn a new programming language or framework, Generative AI comes to the rescue.

  - It generates sample code snippets, explanations, and documentation.

  - Learning becomes more interactive and practical.

- Success Stories:

  - Wendy’s: The fast-food chain has piloted generative AI, built with Google Cloud, to take orders at the drive-thru.

  - CarMax: CarMax used OpenAI models through Microsoft’s Azure OpenAI Service to generate summaries of customer reviews for its vehicle research pages.

  - Six Flags: The amusement park operator announced plans with Google Cloud for a generative AI virtual assistant to help park guests.

  - GE Appliances: Its SmartHQ app uses generative AI on Google Cloud to suggest recipes based on the ingredients users have on hand.
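To make the code refactoring scenario above more tangible, here is a hand-written before-and-after pair of the kind an AI assistant might propose. It is an illustration only, not output from any particular model. The function names, the cart structure, and the 20% tax rate are all assumptions made up for this example. Amounts are integer cents so that the two versions can be compared exactly.

```python
def order_total_before(items):
    """Original version: the subtotal expression is repeated in each branch."""
    total = 0
    for item in items:
        if item["taxable"]:
            total = total + item["price"] * item["qty"] + (
                item["price"] * item["qty"]
            ) * 20 // 100
        else:
            total = total + item["price"] * item["qty"]
    return total


TAX_PERCENT = 20  # assumed rate, for illustration only


def line_total(item):
    """Price of one cart line in cents, including tax where it applies."""
    subtotal = item["price"] * item["qty"]
    if item["taxable"]:
        return subtotal + subtotal * TAX_PERCENT // 100
    return subtotal


def order_total_after(items):
    """Refactored version: duplication removed, tax rate given a name."""
    return sum(line_total(item) for item in items)


cart = [
    {"price": 250, "qty": 2, "taxable": True},
    {"price": 199, "qty": 1, "taxable": False},
]

# A suggested refactor should only be accepted once it is shown to
# behave the same as the code it replaces.
assert order_total_before(cart) == order_total_after(cart)
print(order_total_after(cart))
```

The final check matters as much as the rewrite itself: because refactoring is error-prone, whether done by hand or suggested by a tool, comparing old and new behavior on representative inputs is a sensible gate before merging.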

Challenges and Future Directions With Generative AI

Here are the challenges and future directions related to generative AI:

Balancing Creativity with Best Practices

Challenge: Generative AI models can produce highly creative and novel content, but sometimes at the cost of adhering to established best practices. Striking the right balance between creativity and practicality is crucial.

Future Direction: Researchers and practitioners need to develop techniques that encourage creativity while ensuring outputs remain useful, reliable, and aligned with domain-specific guidelines.

Ethical Use of Generative AI

Challenge: As generative models become more powerful, there’s a risk of misuse. Ensuring ethical behavior, avoiding harmful content, and preventing biases are critical challenges.

Future Direction: Continued research on fairness, transparency, and interpretability will help address ethical concerns. Guidelines and policies should be established for responsible AI deployment.

Ongoing Research and Improvement

Challenge: Generative AI is a rapidly evolving field, and there’s always room for improvement. Models can be more efficient, robust, and capable of handling diverse tasks.

Future Direction: Researchers should focus on model architectures, training techniques, and evaluation metrics. Collaborative efforts across academia and industry will drive progress.

