Trust Issues: Is the Ethical Use of Artificial Intelligence a Myth?

How do we learn to trust AI? We’ve started depending on artificial intelligence, but as reliance grows, so does vulnerability. AI's influence is undeniable. But trust? That's a journey, not just a destination. Accenture's 2022 Tech Vision research cautions that only 35% of global consumers trust how organizations are using AI, and nearly 8 in 10 insist on accountability when AI steps out of line.

Can a robot behave ethically toward people, or is that hoping for a conscience amid code and algorithms? After all, AI's wisdom is only as good as the data it feeds on. In other words, if you feed in bias, it churns out bias; it's that simple. As alarms sound over the ethical issues of using AI, we're left pondering: Is ethical AI an achievable standard or just a modern-day myth we're chasing? Let’s find out!

What is AI Ethics?

What are ethical values in AI all about? AI ethics is about understanding and aligning technology with our collective human conscience. It's a delicate balance, ensuring the machines we build and train respect our societal norms and values. These ethics guard the interface between our digital creations and what we as a society stand for.

Why Is AI Ethics Important?

Embedding ethics in AI is akin to blending empathy into software—it's about making sure our creations do more than just function; they must:

- Reflect our principles

- Adapt to our needs

- Anticipate the nuances of our interactions.

When that level of care is infused into AI, it becomes a technology that isn't just powerful, but also wise and kind, supporting our well-being and upholding our dignity.

Just as empathy in software design ensures a product genuinely resonates with users, ethics in AI is what makes us believe, "Yes, this technology acts in our best interest." It's not just about smart machines; it's about wise, considerate, and ethically-minded AI assistants.

Who’s At Fault When AI Makes an Error?

When AI goes wrong, who's to blame? Building AI is a collaborative effort: developers design the algorithms, data suppliers fuel the system, and users rely on its insights. Accountability for AI-induced errors is therefore a shared responsibility. Nevertheless, the challenge is clear: if we can't clarify who's at fault when AI makes an error, trust in AI will falter.

Finding answers to these accountability questions is not just about avoiding blame—it's about building a solid foundation of trust as we step boldly into the future with AI.

Ethical Issues Surrounding AI

AI systems can create serious ethical problems if they are not designed and implemented responsibly. Some of the most concerning consequences include:

- Increasing Inequality: AI algorithms that exhibit bias or discrimination can worsen existing inequalities in areas like education, healthcare, finance, and democratic processes by unfairly disadvantaging certain groups.

- Prolonged Legal Battles: Unethical AI practices could spark social resistance and extended litigation by impacted communities seeking justice.

- Profiling and Bias: AI algorithms showing bias against particular races, genders, or other demographics can perpetuate harmful stereotyping and discrimination.

- Malicious Misuse: AI technologies can be misused to fake data, steal credentials, disrupt systems, and undermine public trust in technology.

- Violating Core Values: Such unethical AI deployments put fundamental human values like privacy, data protection, fairness, and autonomy at grave risk.

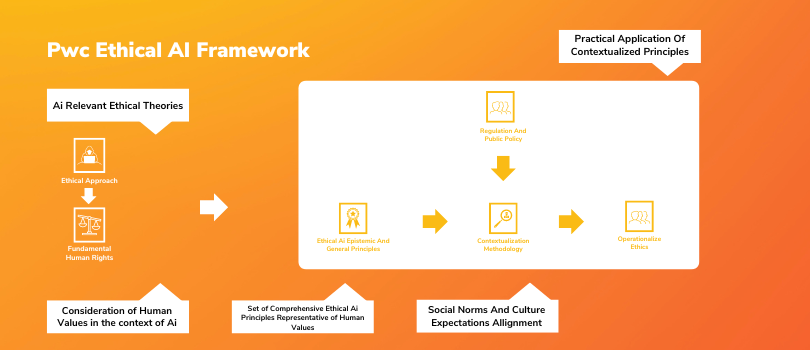

Existing Frameworks Addressing AI Ethics

To mitigate these ethical risks, several AI ethical frameworks and initiatives have emerged to provide guidelines and governance for ethical AI development and use:

- IEEE Global AI Ethics Initiative: This offers standards, training, certifications, and resources to empower ethical AI design, development, and deployment.

- FAT ML Principles: Focused on enabling fairness, accountability, and transparency in machine learning algorithms.

- EU Trustworthy AI Guidelines: The EU's Ethics Guidelines for Trustworthy AI present Europe's approach and principles for ethics in artificial intelligence systems.

- Asilomar AI Principles: One of the earliest and most influential frameworks outlining guidelines for beneficial AI, developed at a 2017 conference organized by the Future of Life Institute.

- Universal Guidelines for AI: An initiative by The Public Voice coalition, released in 2018, to promote a global ethics framework for AI systems.

The goal of these frameworks is to raise awareness and establish processes to ensure AI adheres to ethical principles and benefits humanity as a whole.

How to Develop AI Solutions That Prioritize Safety and Instill Confidence?

“80% of companies plan to increase investment in Responsible AI, and 77% see regulation of AI as a priority.”

1. Foster Positive Relationships

Building trust in AI solution development relies on fostering positive relationships characterized by fairness and transparency. The challenge is to bring those same relationship principles to how people interact with AI technologies.

- Explainable AI: AI systems should provide clear explanations for their decisions so humans understand the rationale behind each outcome.

- Algorithmic Fairness: Identifying and mitigating bias in AI algorithms from the start is crucial to preventing unfair or discriminatory consequences.

- Human-Machine Collaboration: Combining human ingenuity with AI's capabilities creates a powerful synergy that exceeds what either can achieve alone.
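As a rough illustration of what "identifying bias from the start" can look like in practice, the sketch below computes one common fairness check: the gap in positive-decision rates between groups (often called demographic parity). The function names, the sample decisions, and the group labels are all hypothetical, and this is only one of many possible fairness metrics, not a complete audit.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs: 1 = approved, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it is a signal that the model and its training data deserve a closer look before deployment.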

2. Emphasize Good Judgment and Expertise

Just as we expect leaders to be well-informed, trustworthy AI systems must demonstrate good judgment by acknowledging uncertainty and continuously expanding their knowledge.

- Evaluating Algorithmic Uncertainty: Every algorithm carries some degree of uncertainty in its outputs; algorithmic trading under model uncertainty is a common example. Accounting for this uncertainty can reduce risks and improve outcomes.

- Continuous Learning: While humans are lifelong learners, AI technologies can similarly be designed to continually enhance their knowledge and capabilities over time.
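One simple way to account for uncertainty is to let a system defer to a person when it is not sure. The sketch below assumes a hypothetical setup in which several models score the same case; if their scores disagree by more than a chosen threshold, the case is referred to a human. The function name, the threshold value, and the scores are illustrative assumptions, not a standard API.

```python
from statistics import mean, stdev


def predict_with_uncertainty(ensemble_scores, max_spread=0.1):
    """Combine scores from several models and flag uncertain cases.

    ensemble_scores: scores for one case from at least two models.
    max_spread: hypothetical threshold on the standard deviation above
    which the case is handed to a human reviewer.
    """
    estimate = mean(ensemble_scores)
    spread = stdev(ensemble_scores)
    action = "automate" if spread <= max_spread else "refer_to_human"
    return estimate, spread, action


# The models agree closely, so the decision can be automated.
confident = predict_with_uncertainty([0.80, 0.82, 0.81])

# The models disagree widely, so the case goes to a person.
uncertain = predict_with_uncertainty([0.20, 0.90, 0.50])

print(confident[2], uncertain[2])
```

The threshold is a policy choice rather than a technical constant: a stricter value sends more cases to people, which costs time but lowers the risk of confident-looking mistakes.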

3. Ensure Consistency

Trustworthy leaders follow through on their commitments. Similarly, people expect AI systems to perform consistently as promised while ensuring privacy and security.

- Robust and Resilient: AI solutions must maintain their intended functionality even when faced with adversarial attacks or attempts to compromise them.

- Private and Secure: These systems must manage data in a manner that aligns with users' expectations of privacy and adheres to robust security standards.

How to Apply AI Ethics

“Most companies (69%) have started implementing Responsible AI practices, but only 6% have operationalized their capabilities to be responsible by design.”

Ethical guidelines and standards are now being set, and they are essential for both the private sector and public governance.

1. Cultivating Awareness and Dialogue

- The understanding of AI ethics should be universal - a goal for governments, corporations, and individuals alike.

- Initiating widespread conversations and educational campaigns is crucial, involving a variety of stakeholders to foster a collective commitment to ethical AI practices.

2. Embracing Universal Ethical Principles

Countries and companies are crafting codes of ethics and foundational principles that, while not legally binding, shape the conscience of AI. These principles include:

- Transparency: AI should be an open book, with clear data usage and the latest in security measures.

- Non-maleficence and Trust: Above all, do no harm. Technology must prioritize safety and remain ready for human intervention.

- Equitability and Fairness: AI must respect and adhere to human rights, reflecting our commitment to justice.

- Autonomy and Accountability: Our digital counterparts should be transparent about their roles and their makers.

- Explainability: Understanding AI's decision-making is crucial for accountability and social trust.

3. Establishing a Legal Framework for Ethics in AI

Non-mandatory ethical AI frameworks lead the way, but a legal foundation is paramount for lasting change. A standardized approach to AI ethics could significantly accelerate the move toward a legally grounded ethical AI environment.

4. Drafting Sector-Specific AI Guidelines

The use of AI isn't one-size-fits-all. After all, the legal and ethical issues in artificial intelligence differ from one sector to another. Tailored guidelines for sectors like healthcare, education, and e-commerce are required to ensure ethical deployments that reflect the specific needs and challenges of each domain.

5. Ensuring Ongoing Transparency with Evolving Data Use

Technology moves quickly, especially in AI, and legislation struggles to keep up. Ongoing, practical discussions about the ethical use of evolving AI technologies are vital for a sustainable data-driven transformation.

Practical Implementation of AI Ethics

True ethical AI requires a seamless integration of ethical principles throughout the AI lifecycle, from concept to deployment and beyond. This means embedding ethical considerations into AI frameworks early in their design, managing data with integrity, monitoring AI behavior post-deployment, and establishing accountability mechanisms. Clear communication and understandable interactions with AI systems are necessary to maintain the trust and reliability essential for continued use.
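To make "accountability mechanisms" and post-deployment monitoring a little more concrete, here is a minimal sketch of an audit trail that records each AI decision together with the model version and a plain-language explanation. The `DecisionRecord` fields, the `log_decision` helper, and the sample values are all hypothetical; a real system would also need secure storage, access controls, and retention rules.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One auditable AI decision (hypothetical schema)."""
    model_version: str
    inputs: dict
    output: str
    explanation: str
    timestamp: str


def log_decision(model_version, inputs, output, explanation, log):
    """Append a JSON-serialized record of the decision to the audit log."""
    record = DecisionRecord(
        model_version=model_version,
        inputs=inputs,
        output=output,
        explanation=explanation,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    log.append(json.dumps(asdict(record)))
    return record


# An in-memory list stands in for durable audit storage.
audit_log = []
record = log_decision(
    model_version="credit-model-1.2",
    inputs={"income": 52000},
    output="approved",
    explanation="Income above the approval threshold.",
    log=audit_log,
)
print(audit_log[0])
```

Keeping the model version and explanation next to each outcome means that when a decision is questioned later, reviewers can trace which system produced it and why.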

AI Ethics in the Real World

Pioneering companies like Google, Microsoft, and IBM have set forth principles and frameworks to guide ethical AI use. These range from fostering social good to responsible technological governance and continuous trust-building measures.

Google’s AI Principles:

- Introduced in 2018 to guide ethical AI development across products and services.

- Aimed at ensuring social benefits in healthcare, security, energy, transportation, manufacturing, and entertainment.

- Involves education, ethical reviews, and tool development, with collaboration across NGOs, industry partners, and experts.

Microsoft’s Responsible AI:

- Based on six principles: accountability, inclusiveness, reliability and safety, fairness, transparency, and privacy and security.

- Guides the design, building, and testing of AI models with an eye on responsibility.

- Implements proactive measures to address AI risks and reinforces oversight to manage potential negative impacts on society.

IBM’s Trustworthy AI:

- Renowned for prioritizing ethical AI usage, with a developed Responsible Use of Technology framework.

- Focuses on continuous AI model monitoring and validation for stakeholder trust.

- Stresses the importance of reliability in data, models, and overall AI processes.

Anticipating the Future of AI Ethics

As AI matures, emerging ethical concerns will undoubtedly challenge existing norms. Whether it involves the sophistication of deepfakes, implications for safety in autonomous vehicles, or the consequences of AI on labor markets, these issues require attention and action. Moreover, the growing autonomy of AI could provoke debates about legal status and rights, highlighting the necessity for proactive ethical leadership in AI development and application.

Ethical AI isn't solely about immediate challenges; it's about creating an informed global community through education, specialized training, and public dialogue. Fostering an inclusive, equitable dialogue on AI ethics ensures these principles are woven into the fabric of our technological future, championing a fair and responsible digital landscape. To create responsible user-centric AI experiences that are intuitive yet business-minded, get in touch with our OpenAI experts today!
